Context window: AI’s invisible UX challenge

AI ‘forgets’. Here’s how ChatGPT, Cursor, and Gemini provide persistent context

AI’s next UX frontier: Continuity

AI tools are getting better at staying in sync with you: your past chats, your projects, your voice.

Behind that improvement is a shift from prompts to persistent context.

For product makers, this changes how we design experiences: not just responses, but relationships over time.

Why AI forgets: Context window constraint

AI models can only process a limited amount of text at once. That limit, measured in tokens (small chunks of words), is called the “context window”.

Some of today’s models can handle up to 1 million tokens (roughly 750,000 words, or several novels).

Here’s the catch: that window resets with every new conversation. An AI can remember a whole book during one chat, but forget your product name in the next.
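To make the reset concrete, here is a minimal Python sketch. It assumes a hypothetical `ChatSession` class and approximates tokens by counting words. Real models use subword tokenizers and far larger windows. Each session keeps only what fits in its own window, and a new session starts empty.

```python
from dataclasses import dataclass, field


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per word.
    return len(text.split())


@dataclass
class ChatSession:
    """One conversation. The model only 'sees' what fits in this window."""
    max_tokens: int
    history: list[str] = field(default_factory=list)

    def add(self, message: str) -> None:
        self.history.append(message)
        # Evict the oldest messages once the window overflows.
        while sum(count_tokens(m) for m in self.history) > self.max_tokens:
            self.history.pop(0)

    def visible_context(self) -> str:
        return "\n".join(self.history)


# Session 1: the product name sits inside the window.
first = ChatSession(max_tokens=50)
first.add("Our product is called Nimbus.")
print("Nimbus" in first.visible_context())   # True

# Session 2: a new window starts empty, so the name is gone.
second = ChatSession(max_tokens=50)
second.add("What is our product called?")
print("Nimbus" in second.visible_context())  # False
```

Nothing carries over from `first` to `second`. Every approach below is a way of filling that empty window with the right context.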

Real UX challenge: Context continuity

The core problem centers on what happens when the window closes. People don’t think in sessions or chats. They expect AI to remember things like a teammate would.

The question becomes: How do we sustain continuity after every reset?

Three approaches to persistent context

Each approach maintains continuity in a distinct way: by storing, structuring, or reconnecting context when needed. They often appear together because users need continuity at multiple levels of interaction.

Three continuity archetypes

🤝 The Companion (e.g. ChatGPT’s Memory): Learns implicitly and builds personal continuity

⚙️ The Tool (e.g. Cursor Rules): Uses explicit settings to ensure consistent behavior

🗂️ The Librarian (e.g. Gemini + Workspace): Retrieves context from existing data when needed

How AI products are tackling the challenge

(Examples illustrate a primary case. Each product also mixes the others where it fits.)

❶ 🤝 The Companion: ChatGPT’s Memory

🔷 UX Approach: Learn implicitly, remember invisibly

ChatGPT’s Memory quietly tracks a user’s writing style, repeated prompts, and preferences. When it learns something new, it shows a brief “Memory updated” notice.
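OpenAI hasn’t published the internals of Memory in detail. The Python sketch below is therefore only a hypothetical illustration of the Companion pattern. It saves facts across sessions, notifies the user when something changes, and injects saved facts into a new conversation. `MemoryStore`, `remember`, and `inject` are made-up names. The hard part, deciding what is worth remembering, is skipped here, and facts are passed in explicitly.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MemoryStore:
    """Hypothetical long-term memory that outlives any single chat session."""
    facts: dict[str, str] = field(default_factory=dict)

    def remember(self, key: str, value: str) -> Optional[str]:
        # Return a notice only when something new or changed is saved.
        if self.facts.get(key) == value:
            return None
        self.facts[key] = value
        return "Memory updated"

    def inject(self, user_message: str) -> str:
        # Prepend saved facts so a fresh session starts with context.
        if not self.facts:
            return user_message
        known = "\n".join(f"- {k}: {v}" for k, v in self.facts.items())
        return f"Known about the user:\n{known}\n\nUser: {user_message}"


memory = MemoryStore()
print(memory.remember("tone", "concise, no jargon"))  # Memory updated
print(memory.remember("tone", "concise, no jargon"))  # None

# Later, in a brand-new session, the preference is injected automatically.
print(memory.inject("Draft a launch email."))
```

The notice fires only on change, which keeps the pattern quiet. The user never sees the injected preamble, and that is where the transparency trade-off comes from.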

✅ Why It Works:

Reduces cognitive load through quiet persistence

Provides clear controls to view or delete memories

Injects relevant memories at the right moment, creating a sense of ongoing familiarity

⚖️ Trade-Off: Convenience vs. transparency (users may not know what triggered a memory)

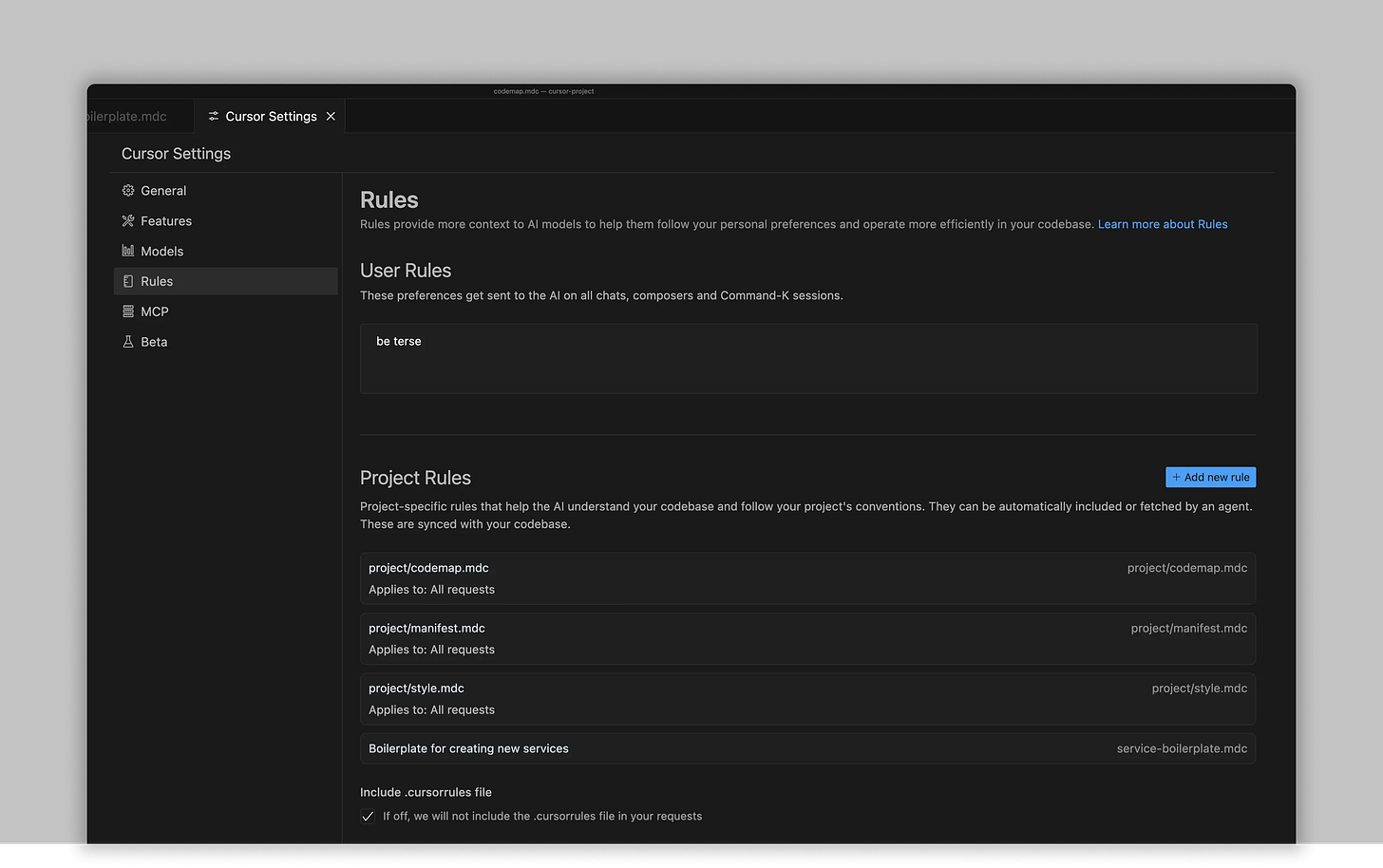

❷ ⚙️ The Tool: Cursor’s Rules

🔷 UX Approach: Nothing implicit, everything explicit

Cursor lets users create “Rules” files that define how the AI behaves. They act like shared guidelines that persist within a defined scope, such as a single project.
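The scoping idea can be sketched in a few lines of Python. This is not Cursor’s actual file format or location. It assumes a made-up layout where each project keeps plain-text rules in a `rules/` folder. `load_rules` and `build_prompt` are hypothetical helpers. The point is that rules are read only from the current project, so they cannot leak into another one.

```python
import tempfile
from pathlib import Path


def load_rules(project_root: Path) -> list[str]:
    # Hypothetical layout: one plain-text rule per file in <project>/rules/.
    rules_dir = project_root / "rules"
    if not rules_dir.is_dir():
        return []
    return [p.read_text().strip() for p in sorted(rules_dir.glob("*.txt"))]


def build_prompt(project_root: Path, request: str) -> str:
    # Explicit and scoped: only this project's rules are prepended.
    header = "\n".join(f"Rule: {r}" for r in load_rules(project_root))
    return f"{header}\n\n{request}" if header else request


with tempfile.TemporaryDirectory() as tmp:
    web, api = Path(tmp, "web"), Path(tmp, "api")
    (web / "rules").mkdir(parents=True)
    (web / "rules" / "style.txt").write_text("Use functional React components.")
    api.mkdir()
    print(build_prompt(web, "Add a login form."))      # includes the web rule
    print(build_prompt(api, "Add a login endpoint."))  # no rules, no crossover
```

Nothing is inferred. If a rule isn’t written down, it doesn’t apply, and that is exactly the control-vs-setup trade-off.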

✅ Why It Works:

Keeps the user’s mental model clean: “These rules belong to this project”

Prevents accidental crossover between workspaces

Reuses patterns without repeated setup, saving user effort

⚖️ Trade-Off: Control vs. setup effort (requires users to configure everything)

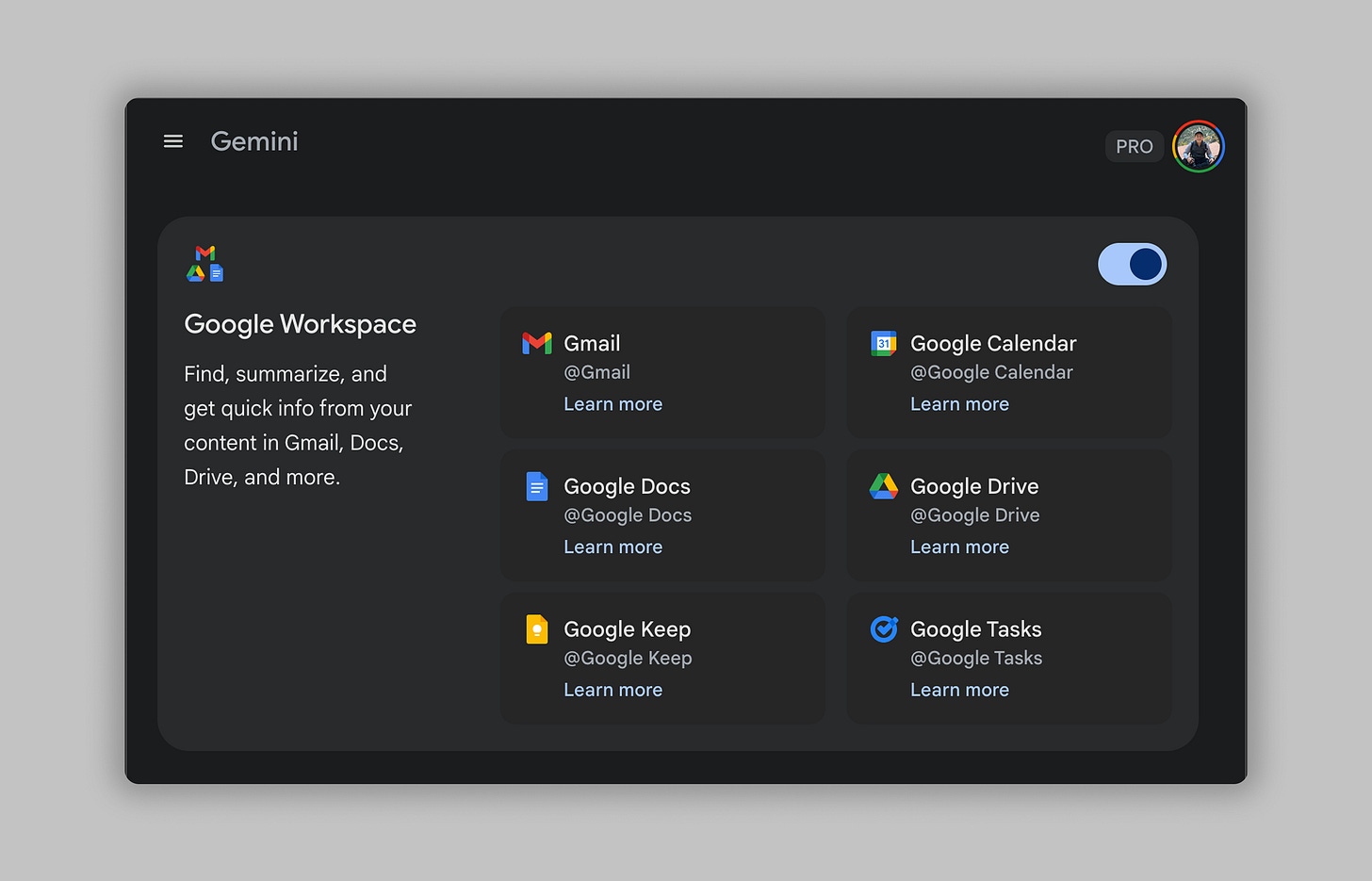

❸ 🗂️ The Librarian: Gemini + Workspace

🔷 UX Approach: Don’t remember, just reconnect

When a user asks a question, Gemini searches their Workspace in real time. Existing Docs, Sheets, and Gmail serve as its external memory.
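Google’s actual retrieval pipeline isn’t public. The Python sketch below shows the general retrieve-then-answer idea behind the Librarian pattern. Simple keyword overlap stands in for the semantic search that production systems typically use. `Doc`, `retrieve`, and the `readers` field are hypothetical. The permission filter runs before ranking, so the assistant can only surface documents the user can already open.

```python
import re
from dataclasses import dataclass

# Tiny stop-word list so filler words don't count as matches.
STOPWORDS = {"a", "an", "and", "the", "is", "what", "for", "of", "in", "to"}


def words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower())) - STOPWORDS


@dataclass
class Doc:
    title: str
    text: str
    readers: set[str]  # users allowed to see this document


def retrieve(query: str, docs: list[Doc], user: str, k: int = 2) -> list[Doc]:
    """Return up to k permitted docs, ranked by word overlap with the query."""
    terms = words(query)
    visible = [d for d in docs if user in d.readers]  # permission check first
    scored = [(len(terms & words(d.text)), d) for d in visible]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


docs = [
    Doc("Q3 plan", "Q3 budget and hiring plan for the design team", {"ana", "raj"}),
    Doc("Offsite", "Agenda for the team offsite in May", {"ana"}),
    Doc("Salaries", "Confidential salary bands and budget", {"hr"}),
]
print([d.title for d in retrieve("What is the Q3 budget?", docs, user="ana")])
# ['Q3 plan']
print([d.title for d in retrieve("What is the Q3 budget?", docs, user="hr")])
# ['Salaries']
```

The same question returns different sources for different users. Nothing new is stored, and the existing permissions decide what the model sees.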

✅ Why It Works:

Scales without expanding the model’s memory

Keeps outputs grounded in source-of-truth documents

Works with existing data organization without any new setup

⚖️ Trade-Off: Seamless access vs. data exposure (access is all-or-nothing, based on Workspace permissions)

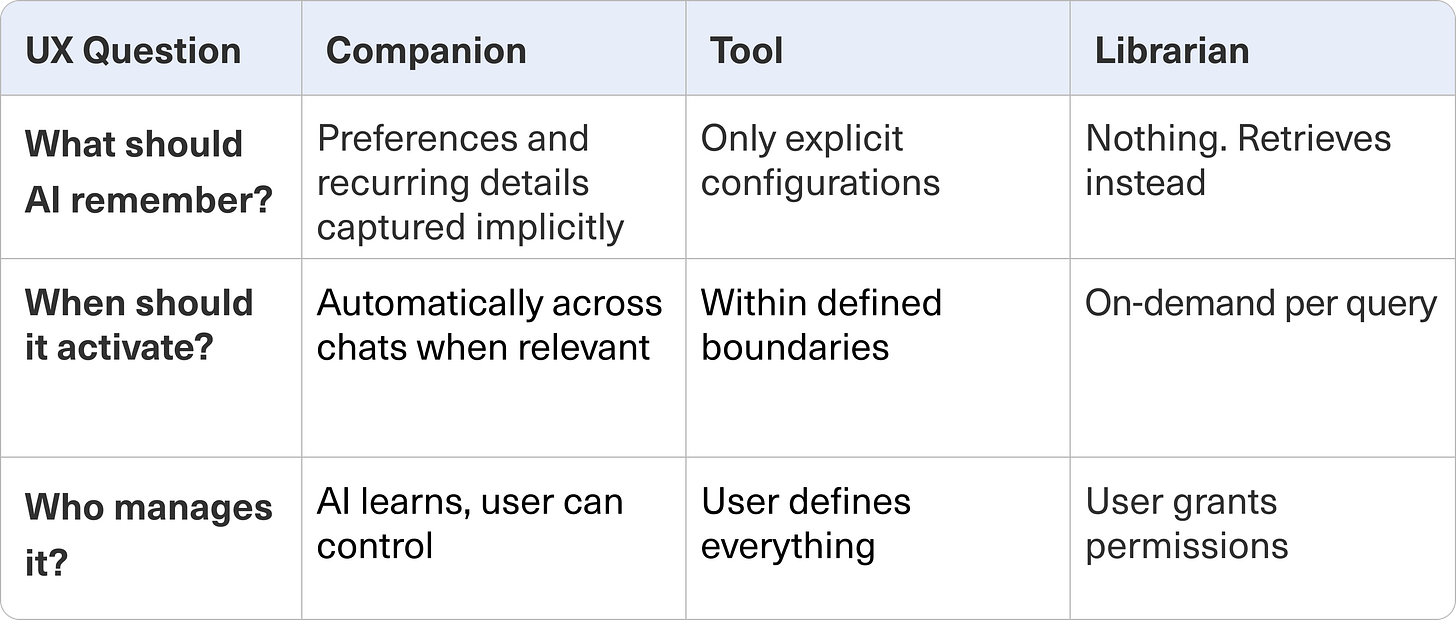

How each pattern answers key UX questions

Note: A feature can blend patterns. “Referencing past chats” sits between Companion and Librarian: implicit signals can trigger it, and the system retrieves from your past chats.
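A blended feature like this can be pictured as two steps: an implicit signal decides whether to look back, and a retrieval step decides what to bring in. The Python sketch below is purely hypothetical. The cue phrases, `refers_to_past`, and `recall` are made up, and real products presumably use learned signals rather than fixed phrases.

```python
import re

# Hypothetical cue phrases suggesting the user means an earlier chat.
CUES = ("last time", "like before", "as we discussed", "same as before")


def refers_to_past(message: str) -> bool:
    text = message.lower()
    return any(cue in text for cue in CUES)


def words(text: str) -> set[str]:
    # Crude filter: ignore words of 3 letters or fewer ("the", "in", ...).
    return {w for w in re.findall(r"\w+", text.lower()) if len(w) > 3}


def recall(message: str, past_chats: list[str]) -> list[str]:
    """Implicit trigger (Companion), then lookup in past chats (Librarian)."""
    if not refers_to_past(message):
        return []  # no signal, so old chats stay out of the context
    query = words(message)
    best_score, best_chat = 0, None
    for chat in past_chats:
        score = len(query & words(chat))
        if score > best_score:
            best_score, best_chat = score, chat
    return [best_chat] if best_chat is not None else []


past = [
    "Pricing page copy: friendly tone, short headlines",
    "Bug report template for the mobile app",
]
print(recall("Write the FAQ in the same tone as last time", past))
# ['Pricing page copy: friendly tone, short headlines']
print(recall("Write the FAQ in a friendly tone", past))  # []
```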

UX insights: Designing for continuity

✅ UX can solve the continuity problem

Users experience “forgetting” as friction. Even if a model resets every session, thoughtful design can preserve a sense of ongoing familiarity. Continuity is less about longer memory and more about smarter context handoffs.

✅ Design how AI remembers

Each approach represents a different mental model:

Implicit feels personal (ChatGPT Memory)

Explicit feels structured (Cursor Rules)

Retrieval feels reliable (Gemini + Workspace)

✅ Keep context within reach

Users care less about whether AI “remembers” and more about whether it finds what matters. If full persistence isn’t feasible, design smooth reconnection to past data or context at the right moment.

Key takeaways

To design continuity in your AI product, ask these questions:

💡 What should AI remember? → Map what needs to persist

💡 When should it activate? → Set clear boundaries

💡 Who manages it? → Give users visibility and control

💡 How to implement? → Layer archetypes. Most products need more than one
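One way to picture layering is as context assembly. Before each request, a product gathers explicit rules (Tool), saved memories (Companion), and retrieved documents (Librarian), then fits them into the window. The Python sketch below is hypothetical. `assemble_context`, the priority order, and the word-count token estimate are all assumptions, not any product’s documented behavior.

```python
def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per word.
    return len(text.split())


def assemble_context(rules: list[str], memories: list[str],
                     retrieved: list[str], budget: int) -> list[str]:
    """Greedily fill a token budget, layer by layer, in a fixed priority order."""
    layers = [("Rule", rules), ("Memory", memories), ("Source", retrieved)]
    picked: list[str] = []
    used = 0
    for label, items in layers:
        for item in items:
            cost = count_tokens(item)
            if used + cost > budget:
                continue  # skip anything that would overflow the window
            picked.append(f"[{label}] {item}")
            used += cost
    return picked


context = assemble_context(
    rules=["Answer in British English."],
    memories=["User prefers bullet points."],
    retrieved=["Q3 budget is approved for two hires.", "Offsite agenda draft v2."],
    budget=12,
)
print(context)
# ['[Rule] Answer in British English.', '[Memory] User prefers bullet points.',
#  '[Source] Offsite agenda draft v2.']
```

Putting rules first assumes explicit settings reflect the user’s most direct intent. The greedy skip also shows a real design tension: the longer, more relevant budget document lost out to a shorter one. A production system might rank by relevance or summarize instead.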

As AI capabilities grow, context continuity will define which products feel natural to use. The challenge is to maintain context in ways users find intuitive and trustworthy.