UX Breakdown for AI Video Generation

From prompt gaps to cost anxiety: how to tackle the UX challenges

Generative video is expanding from memes to Netflix, and the models keep getting better, producing increasingly realistic video and audio.

The UX, however, still has a lot of room for improvement. Video is inherently more complex than text or images, so AI video generation is harder to guide and harder to get right.

Two forces making AI video generation clunky

1. Creative Complexity

Unlike generating text or an image, video demands control over dozens of variables: lighting, camera movement, pacing, audio, and more. Good UX must guide users through these choices without drowning them in controls.

2. Resource Sensitivity

Every generation burns real money in the form of credits and GPU minutes. Users need to balance quality against budget, while platforms need to prevent cost spirals.

Understanding the user journey

Essential UX features for AI video creation

❶ Inspire: Clarify what’s possible and spark concepts

What's needed: A gallery showcasing creative, out-of-the-box use cases

Why it matters: Users need to see possibilities beyond what they would imagine on their own

Working examples: Sora and Midjourney lead with galleries on their landing pages, immediately showing the breadth of possible styles

❷ Initiate: Bridge the gap from simple prompts to creation-ready inputs

What's needed:

Smart prompt enhancement: Turns "dog running" into "golden retriever running toward camera through autumn leaves, wide angle, shallow depth of field"

Image-first creation: generate the starting frame as an image before ‘animating’ the scene

Why it matters: Users often underestimate how much context the AI needs, and poor inputs lead to disappointing outputs

Working examples:

Sora's Storyboard breaks complex ideas into scenes with separate enhanced prompts

Midjourney's text-to-image-then-animate workflow lets users validate the visual before committing to video generation

❸ Iterate: Enable guided exploration while managing costs

What's needed:

Directional controls that guide the AI's creative randomness rather than promising precise edits

Transparent credit usage display

Why it matters: Users want to iterate, but AI generation is inherently chaotic and expensive

Working examples:

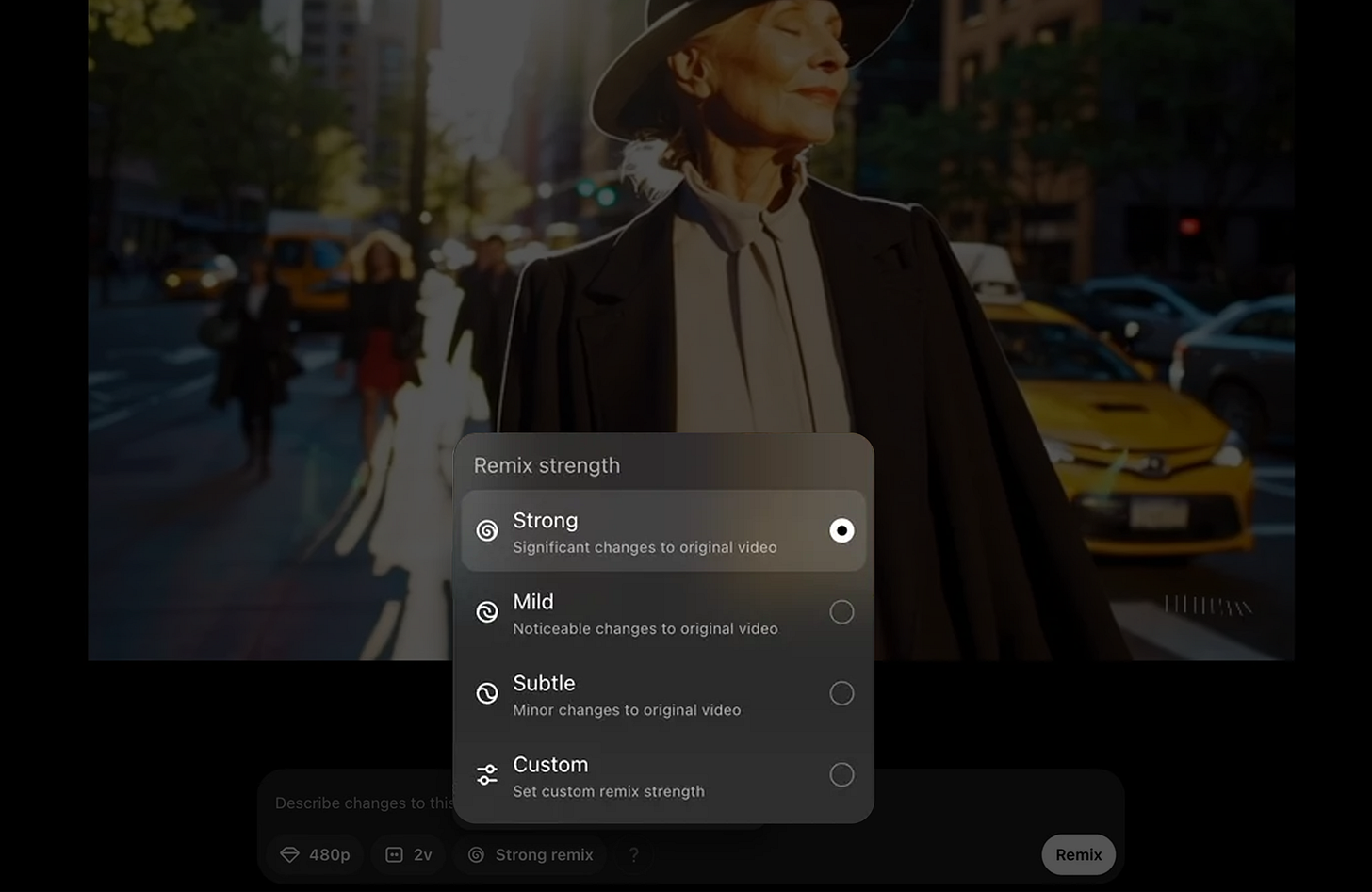

Sora's “Remix strength” setting allows users to control how significantly a video is altered

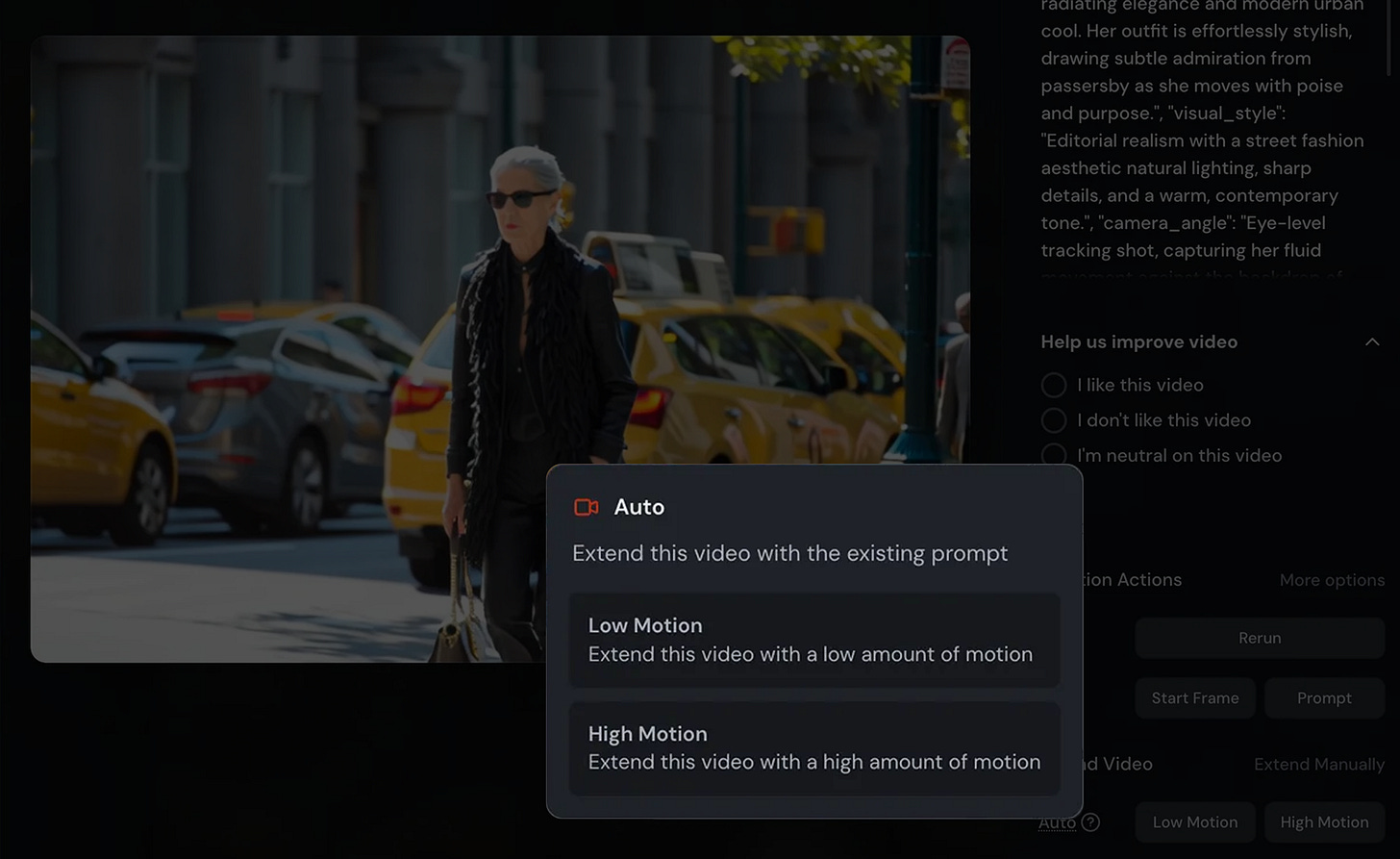

Midjourney's "Low/High motion" setting provides control over the degree of movement

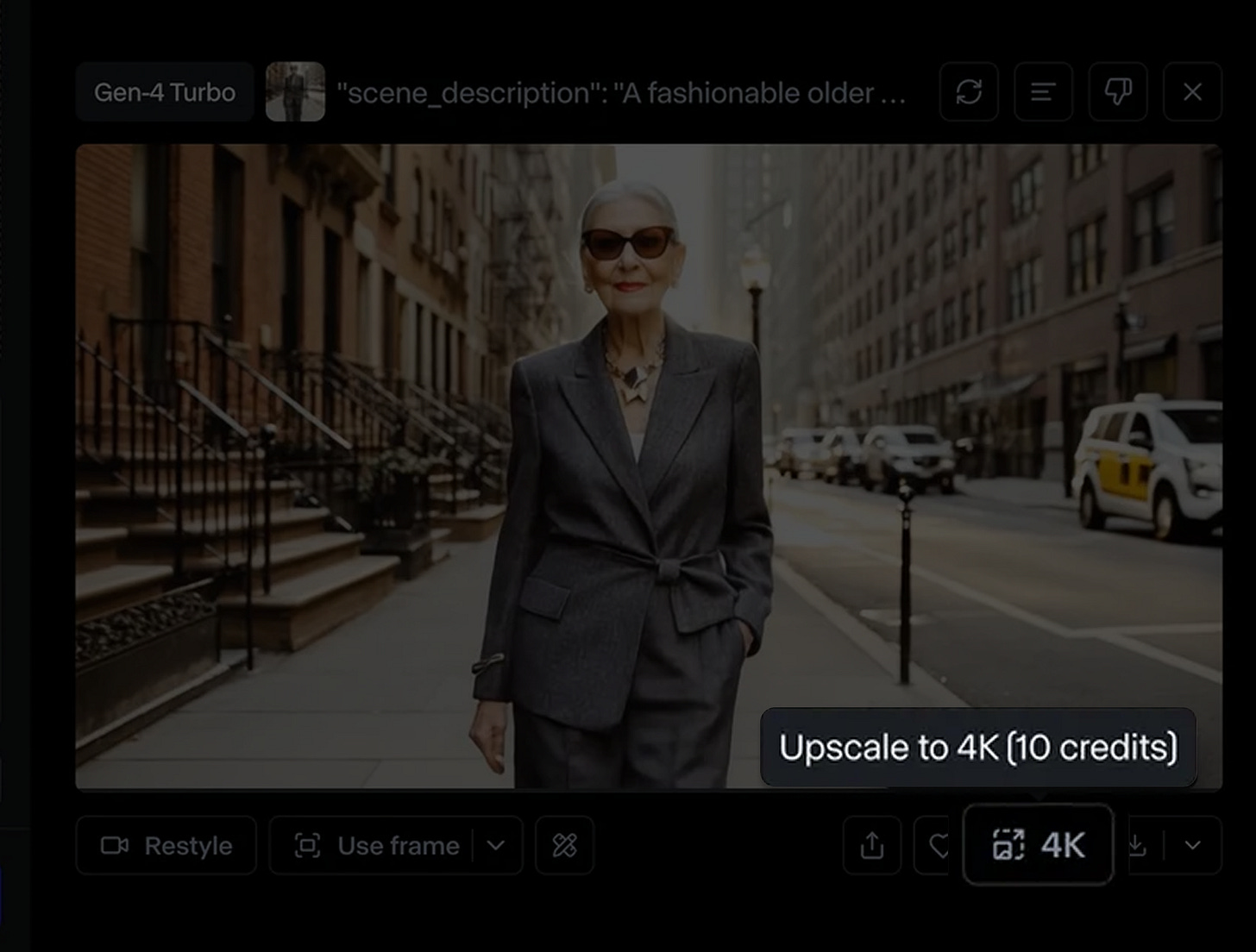

❹ Export: Ensure production-ready output

What's needed:

Video spec options, including resolution upscaling and frame-rate selection

Why it matters: Professional users need high-quality output that fits their production requirements

Working examples:

Runway's 4K upscaling separates draft exploration from final quality

Three key UX insights for AI video generation

✅ Bridge the imagination gap early

The gap between "dog running" and cinema-quality output is huge. Successful UX helps users build creation-ready inputs through prompt enhancement and reference galleries.

✅ Make every dollar count visibly

Video generation burns real resources. Users need constant visibility into costs and smart ways to explore cheaply before committing to expensive final renders.
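One way to make costs visible is to show an estimate before the user clicks Generate, and to make cheap drafts clearly cheaper than final renders. The sketch below is purely illustrative: the tier names, the per-second credit rates in `CREDITS_PER_SECOND`, and the helpers `estimate_credits` and `confirm_message` are hypothetical and do not reflect how Sora, Midjourney, Runway, or any other platform actually prices generations.

```python
from dataclasses import dataclass

# Hypothetical credit rates per second of video, for illustration only.
# Real platforms use their own (and usually more complex) pricing.
CREDITS_PER_SECOND = {"draft": 1, "standard": 4, "final_4k": 10}


@dataclass
class RenderRequest:
    duration_s: int      # clip length in seconds
    tier: str            # one of the hypothetical tiers above
    variations: int = 1  # how many alternative takes to generate


def estimate_credits(req: RenderRequest) -> int:
    """Credit cost to display to the user before they commit to a render."""
    rate = CREDITS_PER_SECOND[req.tier]
    return rate * req.duration_s * req.variations


def confirm_message(req: RenderRequest, balance: int) -> str:
    """Pre-generation message: the cost plus what remains, or a shortfall warning."""
    cost = estimate_credits(req)
    remaining = balance - cost
    if remaining < 0:
        return f"This render needs {cost} credits; you have {balance}."
    return f"{cost} credits ({remaining} left after this render)."


if __name__ == "__main__":
    # Explore four cheap 5-second drafts, then check a 5-second 4K final render.
    drafts = RenderRequest(duration_s=5, tier="draft", variations=4)
    final = RenderRequest(duration_s=5, tier="final_4k")
    print(confirm_message(drafts, balance=60))
    print(confirm_message(final, balance=40))
```

Under these made-up rates, four 5-second drafts cost 20 credits while a single 5-second 4K render costs 50. Surfacing that difference up front is what lets users explore cheaply and commit to an expensive render knowingly.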

✅ Embrace creative unpredictability

AI video generation isn't precise editing; it's controlled randomness. Each generation is unique, which means traditional "undo/redo" mental models break down. UX should frame this as creative exploration, offering ways to guide randomness rather than fighting against AI's inherent variability.

Five UX questions to ask when working on creative AI products

💡 Inspiration: Do users immediately understand the creative ceiling? Can new users quickly grasp what's possible with your tool?

💡 Input Quality: How well do you bridge from simple ideas to AI-ready prompts?

💡 Creative Expectations: How do you help users embrace the AI's creative unpredictability?

💡 Cost Transparency: Can users explore ideas without fear of budget burnout?

💡 Professional Readiness: Does your output hold up in real professional workflows?